AlphaChip: How your next device's chip will be made by an AI

In this new episode we'll delve into AlphaChip, an AI agent that aims to set a new standard in chip design.

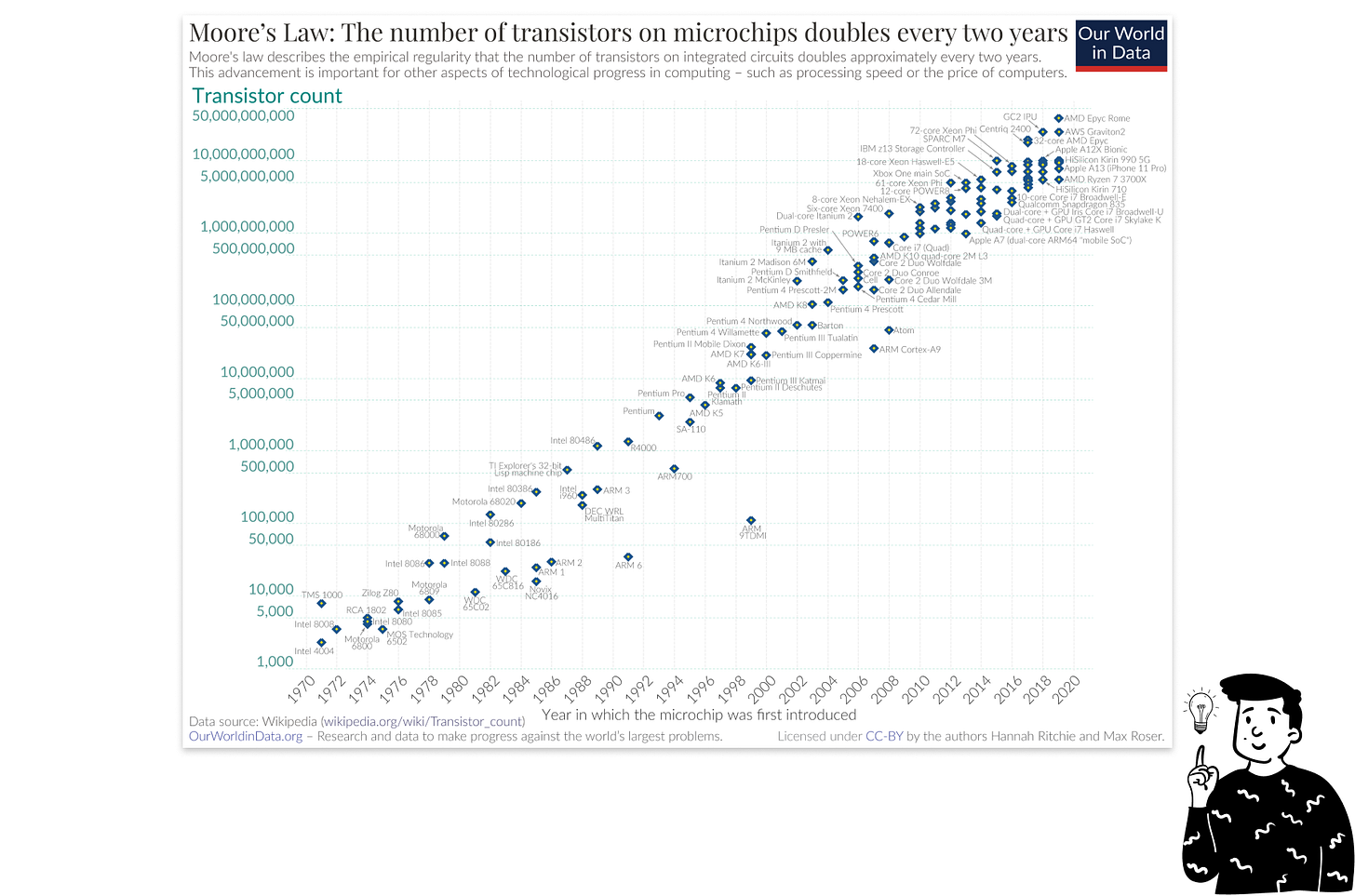

In the rapidly evolving world of artificial intelligence, the performance of AI models relies heavily on the power and efficiency of the underlying hardware. As demands for faster and more complex computations grow, so does the challenge of designing specialized computer chips that can keep up. For many years, advances in these chips (measured by the number of transistors) followed Moore’s law.

Moore’s law states that the number of transistors on a microchip doubles approximately every two years, which historically resulted in a doubling of computational power over the same period.

The figure above is a log-linear plot: the transistor count is drawn on a logarithmic axis, so exponential growth appears as a straight line. The data points stay very close to such a line, showing a strong correlation between time and transistor count (though correlation alone does not imply causation).
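To see why exponential growth becomes a straight line on this kind of plot, write Moore’s law with a two-year doubling time and take the logarithm. Here t is measured in years and N_0 is the transistor count at a reference year t_0; both symbols are introduced only for this illustration.

```latex
N(t) = N_0 \cdot 2^{(t - t_0)/2}
\quad\Longrightarrow\quad
\log_{10} N(t) = \log_{10} N_0 + \frac{\log_{10} 2}{2}\,(t - t_0)
\approx \log_{10} N_0 + 0.15\,(t - t_0)
```

The right-hand side is linear in t, so on a logarithmic axis the count climbs about 0.15 decades per year, or roughly a factor of 10 every 6.6 years.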

However, as growth in computational power begins to slow, the AI industry faces a significant hurdle. Machine learning models require vast amounts of data and unprecedented levels of computational power. The challenge lies in designing microchips that can keep up with these demands.

In 2020, researchers at Google (now part of Google DeepMind) published a paper titled “Chip Placement with Deep Reinforcement Learning”, introducing the approach that Google later named AlphaChip.

The objective of AlphaChip is to automate and optimize chip layout design. The model treats chip layout as a sequential decision-making process, an approach that Google reports has been highly effective in designing its latest TPUs.

The persistent challenges in chip design

Designing microchips requires balancing precision, optimization, and constraint management. Today, most challenges revolve around optimizing PPA (power, performance, and area):

Power: The amount of electrical power the chip consumes.

Performance: How fast a chip can operate.

Area: The physical size of the silicon die.

To meet these requirements, engineers typically take an iterative approach, making incremental adjustments over time. Although effective, this process is slow. AlphaChip aims to match or surpass human experts while producing layouts far faster. Let’s explore two key elements driving AlphaChip’s design:

The element placement process

Graph Neural Networks (GNNs)

The core of AlphaChip

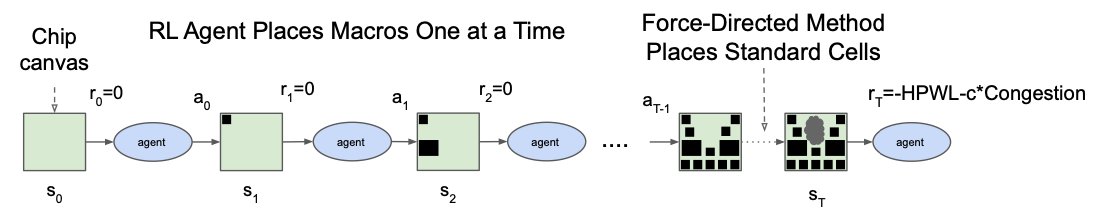

AlphaChip, like many DeepMind agents, is built using reinforcement learning (RL). This technique treats the problem as a game in which the components to be placed resemble puzzle pieces. Unlike a person solving a puzzle, AlphaChip doesn’t “see” the final image; it places pieces step by step, aiming for the best configuration it can find.

The core of AlphaChip’s approach is the idea of sequential placement: each placement affects the next. In technical terms, AlphaChip frames the task as a Markov Decision Process (MDP), a framework that lets the agent “decide” the best action at each step based only on the current state of the layout.
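In symbols, the framework can be sketched as follows. This uses generic RL notation rather than anything taken from the AlphaChip paper: a policy π with parameters θ picks each action from the current state, each placement produces the next canvas, and training maximizes the expected sum of rewards over an episode ending at step T.

```latex
a_t \sim \pi_\theta(\,\cdot \mid s_t), \qquad
s_{t+1} = f(s_t, a_t), \qquad
J(\theta) = \mathbb{E}_{\pi_\theta}\!\left[\sum_{t=0}^{T} r_t\right]
```

Here f is a name introduced for illustration: it simply adds the newly placed macro to the canvas. The Markov property means the policy needs only s_t, not the history of earlier placements, because s_t already records them. With the sparse reward described in the steps below, every r_t except r_T is zero, so J(θ) reduces to the expected final reward.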

The RL process works as follows:

The chip canvas (state s at time t) represents the board where components are placed. At the start (t=0) the board is empty, giving the agent maximum freedom.

With each iteration, the agent places one component (or “macro”) on the canvas. Two elements define each placement:

The action at time t (known as a): the grid cell on the canvas where the current macro is placed.

The reward at time t (known as r): set to 0 at every step except the last, because the layout is only evaluated once it is complete.
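The steps above can be sketched as a toy episode loop. This is not AlphaChip’s code: it assumes a square grid canvas where each macro fills exactly one cell, a random policy standing in for the learned one, and a caller-supplied `final_reward` function. `run_episode` and every other name here are hypothetical.

```python
import random
from typing import Callable, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) on the hypothetical grid canvas


def run_episode(
    num_macros: int,
    grid_size: int,
    final_reward: Callable[[List[Cell]], float],
    seed: Optional[int] = None,
) -> Tuple[List[Cell], List[float]]:
    """Place macros one at a time on an initially empty grid (toy environment)."""
    if num_macros > grid_size * grid_size:
        raise ValueError("more macros than grid cells")
    rng = random.Random(seed)
    occupied = set()              # state s_t: cells already used on the canvas
    placements: List[Cell] = []   # the sequence of actions a_0, a_1, ...
    rewards: List[float] = []     # the sequence of rewards r_0, r_1, ...
    for t in range(num_macros):
        # Legal actions: every free cell (a stand-in for masking infeasible spots).
        legal = [(r, c) for r in range(grid_size) for c in range(grid_size)
                 if (r, c) not in occupied]
        action = rng.choice(legal)  # random policy in place of a learned one
        occupied.add(action)
        placements.append(action)
        is_last = t == num_macros - 1
        # Sparse reward: 0 at every step, the real score only once the layout is complete.
        rewards.append(final_reward(placements) if is_last else 0.0)
    return placements, rewards


if __name__ == "__main__":
    placements, rewards = run_episode(4, 3, final_reward=lambda p: -float(len(p)), seed=0)
    print(rewards)  # [0.0, 0.0, 0.0, -4.0]
```

Only the last entry of `rewards` is non-zero, which is the sparse-reward pattern described above. In a real system, a learned policy would replace `rng.choice`, and the final reward would come from a full evaluation of the finished layout.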

Once AlphaChip has placed every macro at the final step (t=T), a separate, conventional algorithm called the force-directed method for standard cells places the much smaller standard cells in the remaining space on the canvas. Only after all components are placed is the final reward calculated. Providing a reward to the model is a core concept in reinforcement learning. (I’ll be publishing a post on this technique in the coming weeks, so stay tuned!) The calculation takes two factors into consideration:

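HPWL (Half-Perimeter Wirelength): For each net (a group of pins that must be wired together), take half the perimeter of the smallest rectangle enclosing its pins, then sum over all nets. This is a cheap, standard approximation of the total wire length needed to connect all components.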

Congestion: Measures how crowded certain layout areas are with signal routes. High congestion can lead to interference, slower communication, and power inefficiencies.
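Both factors can be sketched in a few lines. `hpwl` follows the standard HPWL definition (bounding-box width plus height per net, summed over nets). `bbox_congestion` is a deliberately crude stand-in that I am assuming for illustration, not the congestion estimate AlphaChip uses: it splits the canvas into square bins and counts how many net bounding boxes touch each bin. Each net is a list of pin coordinates with at least one pin.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) position of a pin


def hpwl(nets: List[List[Point]]) -> float:
    """Half-perimeter wirelength: sum over nets of bounding-box width + height."""
    total = 0.0
    for pins in nets:
        xs = [x for x, _ in pins]
        ys = [y for _, y in pins]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def bbox_congestion(nets: List[List[Point]], bin_size: float) -> Dict[Tuple[int, int], int]:
    """Hypothetical congestion proxy: how many net bounding boxes touch each bin."""
    demand: Dict[Tuple[int, int], int] = {}
    for pins in nets:
        xs = [x for x, _ in pins]
        ys = [y for _, y in pins]
        # Every bin overlapped by this net's bounding box gets one unit of demand.
        for bx in range(int(min(xs) // bin_size), int(max(xs) // bin_size) + 1):
            for by in range(int(min(ys) // bin_size), int(max(ys) // bin_size) + 1):
                demand[(bx, by)] = demand.get((bx, by), 0) + 1
    return demand


if __name__ == "__main__":
    nets = [[(0, 0), (2, 1)], [(1, 1), (1, 3), (3, 2)]]
    print(hpwl(nets))                               # 7.0
    print(max(bbox_congestion(nets, 2).values()))   # 2
```

The most crowded bins are the ones several nets must pass through; a real congestion model compares routing demand against the routing capacity actually available in each region.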

By defining the reward as the negative of these costs, AlphaChip turns reward maximization into minimizing the combined HPWL and congestion cost. This encourages the agent to develop strategies that produce compact, efficient layouts. The ultimate goal is a design that optimizes key factors such as wire length, congestion, and density, all while meeting human-imposed performance standards.
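Written as a formula, the reward for a finished placement p might look like this. The weights λ and γ are assumed hyperparameters that trade the terms off against each other: setting γ = 0 gives the two-factor version (HPWL and congestion), while γ > 0 also penalizes the density mentioned in the goal.

```latex
R(p) = -\,\mathrm{HPWL}(p) \;-\; \lambda\,\mathrm{Congestion}(p) \;-\; \gamma\,\mathrm{Density}(p),
\qquad \lambda, \gamma \ge 0
```

Maximizing R(p) is exactly minimizing the weighted cost HPWL(p) + λ·Congestion(p) + γ·Density(p), which is why a negative reward turns the agent into a cost minimizer.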

Smarter Layouts with Graph Neural Networks

As we’ve seen, AlphaChip places critical elements on the canvas. But how does it differentiate components and manage relationships between them? Graph Neural Networks (GNNs) serve as AlphaChip’s “brain” for understanding interconnections that arise with each placement.

Each chip design can be represented as a graph, where nodes are components and edges represent connections between them. GNNs perform three main tasks:

Unique Node Embeddings: Each component (node) has unique characteristics like type, size, and connectivity needs. The GNN generates an embedding for each node, encapsulating these features.

Connectivity Awareness: Edges represent communication pathways. By incorporating this information, AlphaChip can weigh competing goals: keeping heavily connected components close together to shorten wires, while leaving enough space between components to avoid congestion.

Comprehensive Layout Understanding: Processing the entire chip as a graph enables AlphaChip to evaluate the impact of each placement on the overall design.
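To make “node embeddings” concrete, here is a toy round of message passing in pure Python. Each component starts from a small feature vector, and each round mixes in the average of its neighbours’ vectors. This is a generic mean-aggregation scheme with hand-picked features and no learned weights, assumed for illustration; AlphaChip’s own network is learned and more elaborate. The component names and feature values are hypothetical.

```python
from typing import Dict, List, Tuple


def build_graph(edges: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Undirected adjacency list: nodes are components, edges are connections."""
    adj: Dict[str, List[str]] = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    return adj


def message_pass(features: Dict[str, List[float]],
                 adj: Dict[str, List[str]],
                 rounds: int = 1) -> Dict[str, List[float]]:
    """Each round: new h_v = 0.5 * h_v + 0.5 * mean of neighbours' h_u.

    Assumes every node that appears in `adj` also has an entry in `features`.
    """
    h = {v: list(f) for v, f in features.items()}
    for _ in range(rounds):
        new_h: Dict[str, List[float]] = {}
        for v, hv in h.items():
            nbrs = adj.get(v, [])
            if not nbrs:
                new_h[v] = hv[:]  # an isolated node keeps its own embedding
                continue
            mean = [sum(h[u][i] for u in nbrs) / len(nbrs) for i in range(len(hv))]
            new_h[v] = [0.5 * a + 0.5 * b for a, b in zip(hv, mean)]
        h = new_h
    return h


if __name__ == "__main__":
    adj = build_graph([("cpu", "sram"), ("cpu", "io")])
    feats = {"cpu": [1.0, 4.0], "sram": [1.0, 2.0], "io": [0.0, 0.0]}
    out = message_pass(feats, adj, rounds=1)
    print(out["io"])  # [0.5, 2.0]
```

After one round, the `io` embedding has moved toward `cpu` because the two are connected, so each vector now carries information about its neighbourhood. Running more rounds spreads that information further across the graph.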

By embedding component relationships and connectivity, AlphaChip creates layouts that aim to maximize performance and minimize congestion. Because the GNN learns from the structure of the chip’s graph rather than from one fixed layout, the same approach can adapt to different chip designs, which is key to meeting the demands of modern AI applications.

Takeaways

Chip design is at a pivotal moment: As Moore’s law slows, the need for efficient, AI-optimized chip layouts is at an all-time high.

AlphaChip leverages reinforcement learning: By treating chip layout as a game, AlphaChip automates the placement step of chip design, aiming for placements that human engineers can’t easily achieve.

Graph Neural Networks drive smarter layouts: AlphaChip uses GNNs to understand and manage relationships between components, ensuring strategic placements based on connectivity.

HPWL and congestion minimization are key: By minimizing wiring length and reducing congestion, AlphaChip creates compact, efficient designs that meet strict performance standards.

Transformative potential for tech industries: AlphaChip’s innovations in chip design have broad implications, offering faster, more powerful hardware that could redefine AI capabilities across industries.